Programming Languages and Database

- Python focused on data analysis.

- Python web scraping (Beautiful Soup, Scrapy).

- SQL for data extraction.

- SQLite Database.

- SAS Enterprise Guide software.

- Qlik Script Language.

My name is Deivison Morais. I have a degree in civil production engineering, and since 2010 I have been working in the area of appraisal and expertise engineering. At the end of 2018, however, a book I read completely by chance set my career transition in motion. Reading Industry 4.0 Concepts and Fundamentals was a great turning point in my life! Awakening to the great technological transformations that have been taking place simultaneously since the beginning of the fourth industrial revolution made me look for a new professional and life purpose.

The idea that all evolution of a species occurs through a process of adaptation is a remarkable one. My natural path of adaptation was to seek more knowledge about this new technological revolution, so in March 2019 I started the MBA in Industry 4.0 at PUC-Minas, which was at the time the first MBA course on this subject authorized in Brazil.

The MBA offered an excellent overview of the fundamental pillars of Industry 4.0, and the ones that most caught my attention were artificial intelligence and big data analytics. After finishing the course in June 2020, I realized that my vocation and natural path were to keep delving into these two areas, and that is how I found data science as my new profession.

Since 2020 I have been building this new chapter of my career. The journey through this transformation has been incredible, and it has confirmed for me why I became a data scientist.

Engaged as a data scientist in the development of end-to-end data solutions in the legal domain.

Created dashboards using the Qlik Sense Enterprise tool to serve various sectors of the Court.

Constructed process flowcharts with the aim of developing automated data solutions.

Developed statistical technical reports/studies.

Worked as a data analyst in the Integrated Demand and Supply Planning sector of Logistics management.

Developed dashboards in Power BI for the logistics area.

Mapped processes and constructed flowcharts with the aim of developing automated data solutions.

Developed data projects in SAS for the logistics area.

Consulting projects for companies in the retail and technology sectors.

End-to-end data projects - product segmentation (data extraction, cleansing, preparation, modeling, and deployment).

Data analysis projects using SAP system database - healthcare sector company.

Development of data solutions for business problems, simulating real-world challenges, using public data from data science competitions. Each project ran from the analytical interpretation of the problem, through the structuring and execution of the business solution, to the deployment of the trained model to production using cloud computing tools.

In these projects, I used statistical techniques to analyze the data and machine learning models to solve the business problems. A detailed description of each project can be found in the next section of this portfolio.

Throughout my career I have gained experience preparing technical reports for the appraisal of urban and rural properties located in the area of influence of infrastructure projects (roads, railways, pipelines).

In my professional practice I also carried out economic evaluations of commercial establishments subject to expropriation (calculating Trade Fund and Business Interruption), whose main objective is to support strategic decision-making in projects involving large areas to be expropriated. In the article "A Importância das Avaliações Econômicas no Estudo de Viabilidade para Projetos que Envolvam Desapropriações em Áreas Urbanas", presented at the XX COBREAP (XX Brazilian Congress of Assessment and Expertise Engineering), I detail through a case study one of the projects of this nature in which I worked.

I was part of the team of evaluating engineers at the consulting company that assisted the Renova Foundation in calculating the property and economic indemnities of those impacted by the failure of the Fundão dam of Mineradora Samarco in Mariana-MG in 2015. Because the event had multiple impacts across more than 40 municipalities, I was able to work on evaluations of the most varied types, which I consider a unique experience.

During my professional development, I carried out technical surveys and inspections for different purposes in new, old, and under-construction buildings. In addition, I worked in risk engineering, identifying situations that could put the safety of users and assets at risk.

I have experience in the technical coordination of service contracts for the land management sectors of large mining companies. In this role, I had to interact with several internal and external stakeholders simultaneously, which is very challenging professionally.

Among my responsibilities toward internal stakeholders were organizing and planning the workflow of the field and office teams in view of the established deadline, quality, and cost; training and qualifying new employees; managing people; and reporting to the board on goals and results.

With regard to external stakeholders, I was responsible for assisting analysts, coordinators, and managers; developing new technical products adjusted to meet specific issues; agreeing on deadlines for the delivery and performance of services; presenting results; and coordinating fieldwork in communities impacted by mining activity.

Work in the expertise area requires answering several types of questions, among which I highlight: What is this problem in my building? What is causing this infiltration in my apartment? Do these fissures and cracks put my physical safety or my property at risk? How much does it cost to fix the problem? To answer these questions, the professional needs the analytical capacity to observe a particular problem situation and build a reasoned analysis of its origin and of the possible cause-and-effect relationship behind the fact to be clarified. In addition, all the work is formalized in a technical report that must be understandable to people with no engineering background.

This project, which forms part of my professional portfolio, seeks to create a business solution that will help the CEO of Rossmann, one of the largest drugstore chains in Europe, to define which stores should be renovated depending on revenue.

I developed and deployed to production a machine learning model capable of predicting store sales for the next 6 weeks. In addition, a bot in the Telegram application gives the CEO access to the model in production, so that the CEO can get the stores' sales forecasts in the palm of his hand.

This project aims to create a business solution that will help the King County government make better decisions regarding the expropriation process of properties that may be directly impacted by new infrastructure projects.

I developed two data products: a machine learning model capable of calculating the market value of a property, and a dashboard that analyzes whether the prices offered by real estate agencies in the county are in line with the real market value.

If these two products were used, the King County government would reduce its project cost and could make faster strategic decisions about the economic viability of new infrastructure projects.

This project seeks to create a business solution that will help a health insurance company define which customers should be selected to receive an offer for a newly launched service, vehicle insurance. The board established that the previous year's customer database should be used as the data source, since a survey on the intention to take out vehicle insurance was carried out with these policyholders.

Based on historical customer data and the survey on the intention to take out vehicle insurance, I developed a machine learning model capable of ranking a list of 127,000 new customers by their probability of taking out the insurance.
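The ranking idea can be illustrated with a minimal sketch. It is not the project's actual model: the customer ids, the scores, and the `interested` labels below are made-up assumptions, and the helper names `rank_by_propensity` and `cumulative_gain` are hypothetical. In practice the scores would come from a trained classifier's predicted probabilities.

```python
# Hypothetical (customer_id, predicted probability of buying vehicle insurance).
# In a real project these scores would come from a trained model.
scores = [("c1", 0.10), ("c2", 0.80), ("c3", 0.35), ("c4", 0.60), ("c5", 0.05)]

# Hypothetical ground truth: 1 = customer was actually interested.
interested = {"c1": 0, "c2": 1, "c3": 0, "c4": 1, "c5": 1}


def rank_by_propensity(pairs):
    """Sort customers from highest to lowest predicted probability."""
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


def cumulative_gain(ranked, actual, k):
    """Share of all interested customers found among the top-k ranked ones."""
    top_k = [customer for customer, _ in ranked[:k]]
    total_interested = sum(actual.values())
    return sum(actual[customer] for customer in top_k) / total_interested


ranked = rank_by_propensity(scores)
gain_at_2 = cumulative_gain(ranked, interested, k=2)
print([customer for customer, _ in ranked])
print(f"Share of interested customers reached with 2 contacts: {gain_at_2:.2f}")
```

A cumulative gain figure like this one is a common way to judge a ranked list, because it tells the business what share of interested customers is reached for a given number of contacts.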

This project from my professional portfolio aims to collect and analyze information using SQL on the dataset provided by the company Olist. The dataset has around 100,000 orders placed on several e-commerce sites in Brazil.

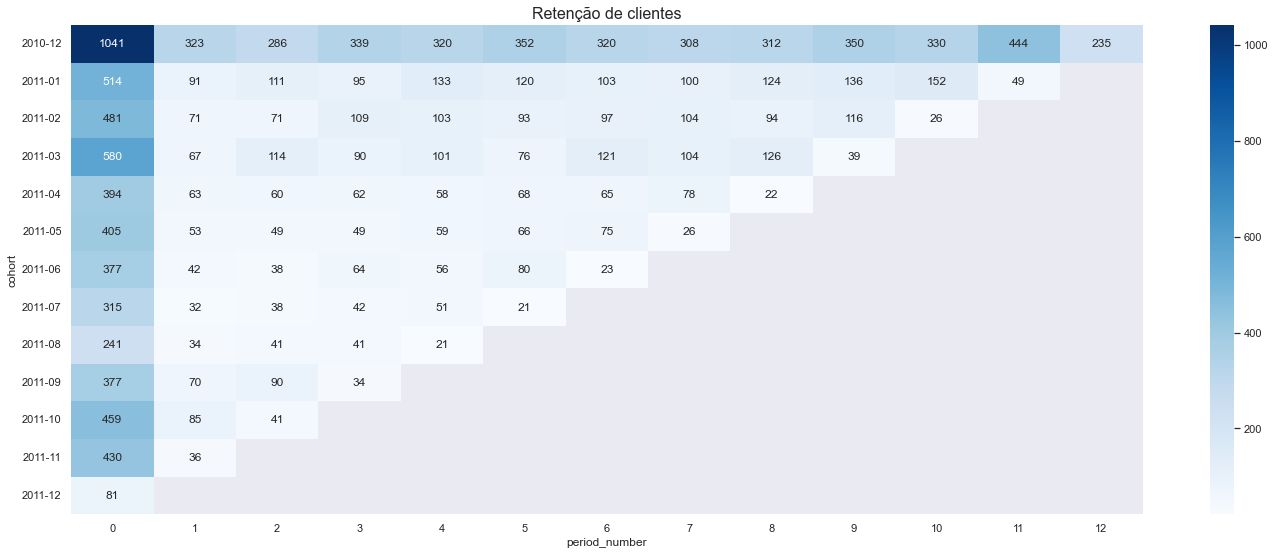
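As a minimal sketch of this kind of SQL analysis, the example below runs an aggregation query against an in-memory SQLite database. The `orders` table, its columns, and its rows are hypothetical simplifications for illustration and are not the actual Olist schema or data.

```python
import sqlite3

# In-memory database with a hypothetical, simplified orders table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "order_id TEXT PRIMARY KEY, customer_state TEXT, order_value REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("o1", "SP", 120.0),
        ("o2", "SP", 80.5),
        ("o3", "MG", 200.0),
        ("o4", "RJ", 50.0),
    ],
)

# Number of orders and total revenue per state, highest revenue first.
rows = conn.execute(
    """
    SELECT customer_state,
           COUNT(*)                   AS n_orders,
           ROUND(SUM(order_value), 2) AS revenue
    FROM orders
    GROUP BY customer_state
    ORDER BY revenue DESC
    """
).fetchall()

for state, n_orders, revenue in rows:
    print(state, n_orders, revenue)

conn.close()
```

The same `GROUP BY` pattern extends to other cuts of the data, such as orders per month or per product category, once the corresponding columns are available.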

This project aims to perform a customer retention analysis using cohort analysis on a dataset provided by a UK e-commerce network. The dataset has information on thousands of tax invoices issued over a period of one year.

Customer retention corresponds to an organization's ability to retain its loyal customers over time. Cohort analysis is a powerful tool for identifying patterns and creating business levers within the context of customer retention.
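To make the idea concrete, here is a minimal sketch of a monthly cohort retention table, assuming each customer's cohort is the month of their first purchase. The invoice rows are made up for illustration, and the helper names `month_index` and `retention_table` are hypothetical; the project itself may compute retention differently.

```python
from collections import defaultdict
from datetime import date

# Hypothetical invoices: (customer_id, invoice_date). Illustrative data only.
invoices = [
    ("A", date(2011, 1, 5)),
    ("A", date(2011, 2, 10)),
    ("A", date(2011, 3, 2)),
    ("B", date(2011, 1, 20)),
    ("B", date(2011, 3, 15)),
    ("C", date(2011, 2, 1)),
    ("C", date(2011, 3, 9)),
]


def month_index(d):
    """Map a date to a running month number so offsets are plain subtraction."""
    return d.year * 12 + d.month


def retention_table(rows):
    """Return {cohort_month: {months_since_first_purchase: retention_rate}}."""
    # A customer's cohort is the month of their first purchase.
    first_month = {}
    for customer, day in rows:
        m = month_index(day)
        first_month[customer] = min(first_month.get(customer, m), m)

    # Distinct customers active in each (cohort, month offset) cell.
    active = defaultdict(set)
    for customer, day in rows:
        cohort = first_month[customer]
        active[(cohort, month_index(day) - cohort)].add(customer)

    # Retention = active customers in the cell / size of the cohort at offset 0.
    table = {}
    for cohort, offset in sorted(active):
        cohort_size = len(active[(cohort, 0)])
        table.setdefault(cohort, {})[offset] = len(active[(cohort, offset)]) / cohort_size
    return table


table = retention_table(invoices)
for cohort, row in table.items():
    year, month = divmod(cohort - 1, 12)
    print(f"{year}-{month + 1:02d}", row)
```

Each row of the table is one cohort, and each column is the number of months since the first purchase, so reading across a row shows how quickly that cohort's customers stop returning.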

These notes are part of my studies of the book Basic Econometrics, 5th edition, by Damodar N. Gujarati and Dawn C. Porter. The book dedicates 13 chapters to the study of linear regression within the context of econometrics. The notes consist of examples and exercises explored with Python ecosystem tools.

These notes are part of my study of Practical Statistics for Data Scientists, 1st edition, by Peter Bruce and Andrew Bruce. The book dedicates several chapters to statistics, focusing on what is widely used in data science. The notes consist of examples explored with Python ecosystem tools.

One of the most important skills in data science is web data collection, better known as web scraping. These notes consist of study projects built with Python ecosystem tools, in which I collect information in an automated way from websites, e-commerce sites, and travel platforms.

Feel free to send me a message! We can get in touch via: